- 27 November 2024 at 13:43 UTC


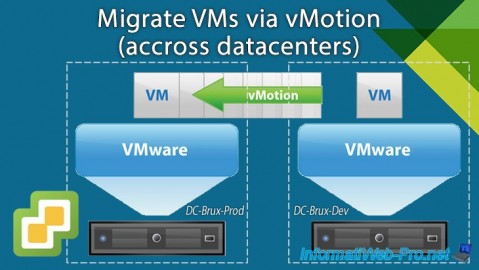

With VMware vSphere vMotion, you can hot or cold migrate virtual machines from one VMware ESXi host to another through the VMware vSphere Client on your VMware vCenter Server (VCSA).

To learn how to configure the prerequisites for vSphere vMotion, and which best practices to follow when using this feature, refer to our tutorial: Migrate virtual machines (VMs) via vMotion on VMware vSphere 6.7.

In that tutorial, we migrated a virtual machine from one VMware ESXi host to another, but both hosts were in the same data center.

In this tutorial, you will see how to migrate a virtual machine between 2 hosts located in different data centers.

- Network configuration

- Test network connectivity between 2 VMkernel vMotion interfaces

- Virtual networks used

- Migrate a virtual machine between 2 data centers

1. Network configuration

For this example, we simulated 2 data centers (one for development and one for production), both located in the same city (Brussels).

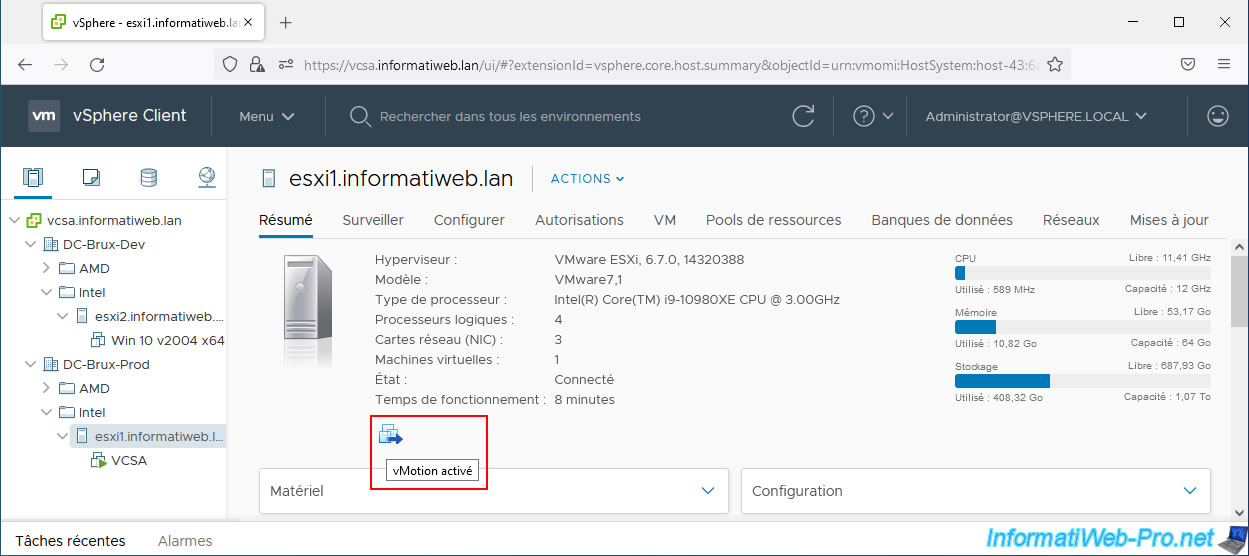

As you can see in the inventory, host "esxi1" is in the production data center (DC-Brux-Prod) and host "esxi2" is in the development data center (DC-Brux-Dev).

In our case, vMotion is already enabled on our VMware ESXi hosts.

If it is not enabled on yours, refer to the vMotion tutorial mentioned at the beginning of this tutorial.

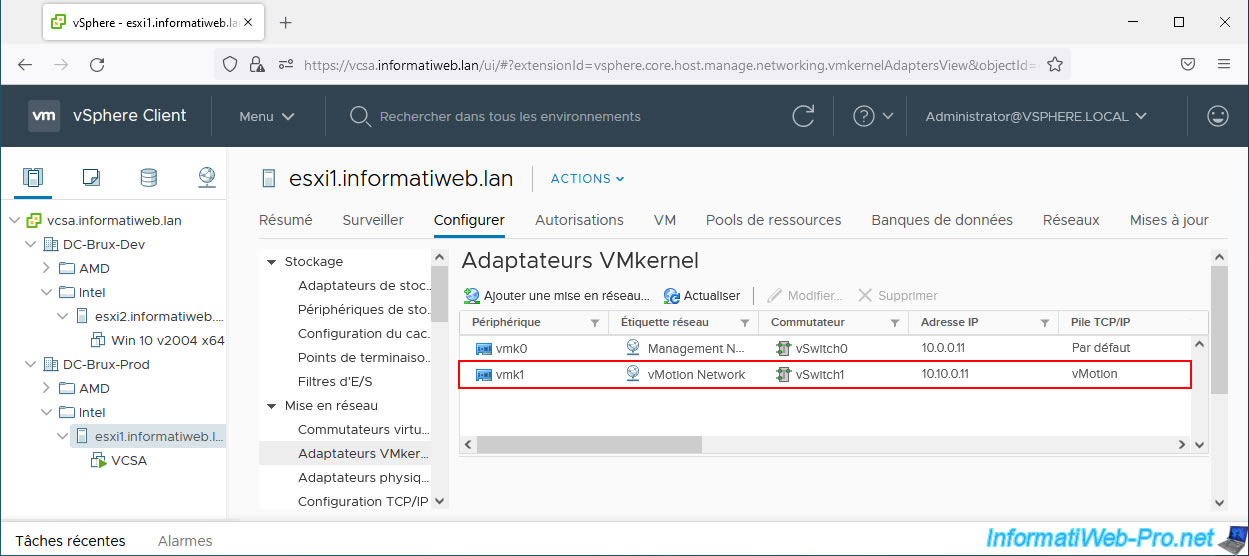

For this tutorial, we created a new VMkernel adapter "vmk1" that uses:

- a virtual network named "vMotion Network"

- a virtual switch named "vSwitch1"

- the IP address "10.10.0.11" (on a separate subnet dedicated to vMotion traffic)

- the vMotion TCP/IP stack (available since vSphere 6.0)

To create a new adapter, select your VMware ESXi host and go to: Configure -> Networking -> VMkernel adapters.

Then click: Add Networking.

Note: if necessary, refer to our vMotion tutorial.

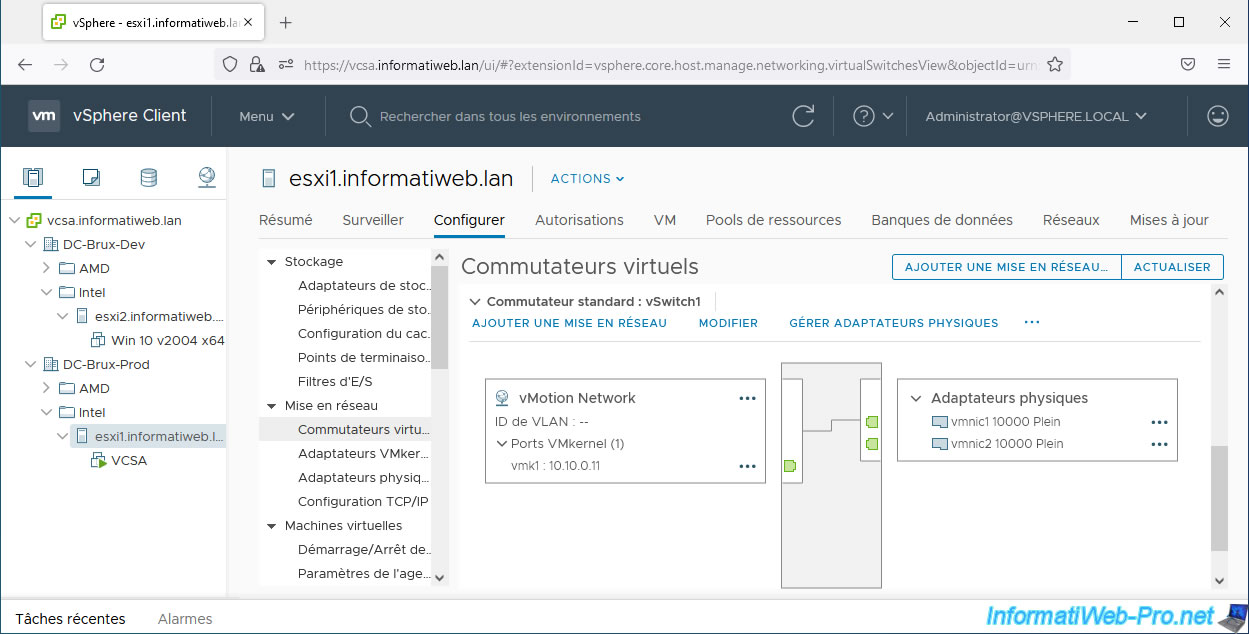

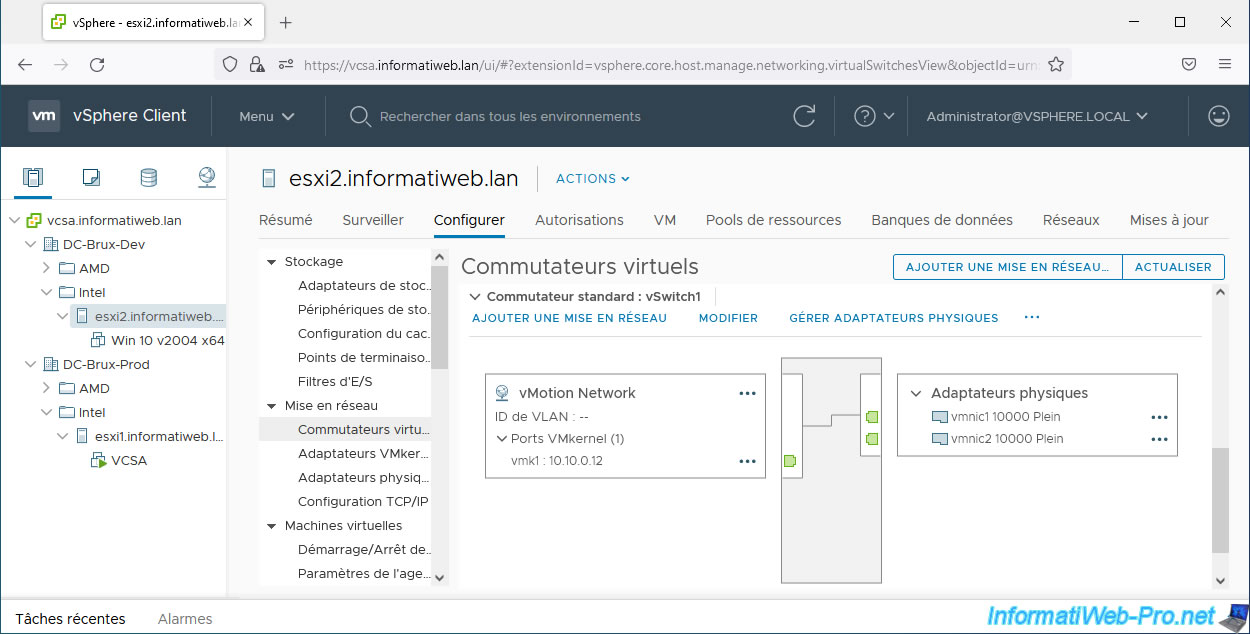

On the virtual switch, you can again see that it is linked to the "vMotion Network" virtual network and to the VMkernel interface "vmk1", whose IP address is "10.10.0.11".

You can also see that 2 physical network adapters are associated with this virtual switch.

Technically, a single adapter would be enough for vMotion. However, VMware best practices recommend using at least 2 (one active and the other on standby as a backup).
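To illustrate the active/standby principle, here is a minimal Python sketch of how an explicit failover order picks the physical adapter that carries vMotion traffic. It is a simplified model, not ESXi code: the uplink names "vmnic2" and "vmnic3" are hypothetical (the tutorial does not name the 2 physical network adapters), and load-balancing policies other than explicit failover order are ignored.

```python
from typing import Optional, Sequence, Set


def select_uplink(active: Sequence[str], standby: Sequence[str],
                  link_up: Set[str]) -> Optional[str]:
    """Return the physical NIC that would carry vMotion traffic.

    Simplified model of an explicit failover order: the first active
    uplink whose link is up is used; standby uplinks are only used when
    no active uplink is available.
    """
    for nic in list(active) + list(standby):
        if nic in link_up:
            return nic
    return None  # no uplink available: vMotion traffic cannot leave the host


# Hypothetical uplink names: the tutorial does not name the physical NICs.
ACTIVE = ["vmnic2"]
STANDBY = ["vmnic3"]

print(select_uplink(ACTIVE, STANDBY, {"vmnic2", "vmnic3"}))  # vmnic2 (normal operation)
print(select_uplink(ACTIVE, STANDBY, {"vmnic3"}))            # vmnic3 (active NIC failed)
print(select_uplink(ACTIVE, STANDBY, set()))                 # None (both NICs down)
```

With a single physical adapter, the last case would be reached as soon as that adapter fails, which is why a standby adapter is recommended.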

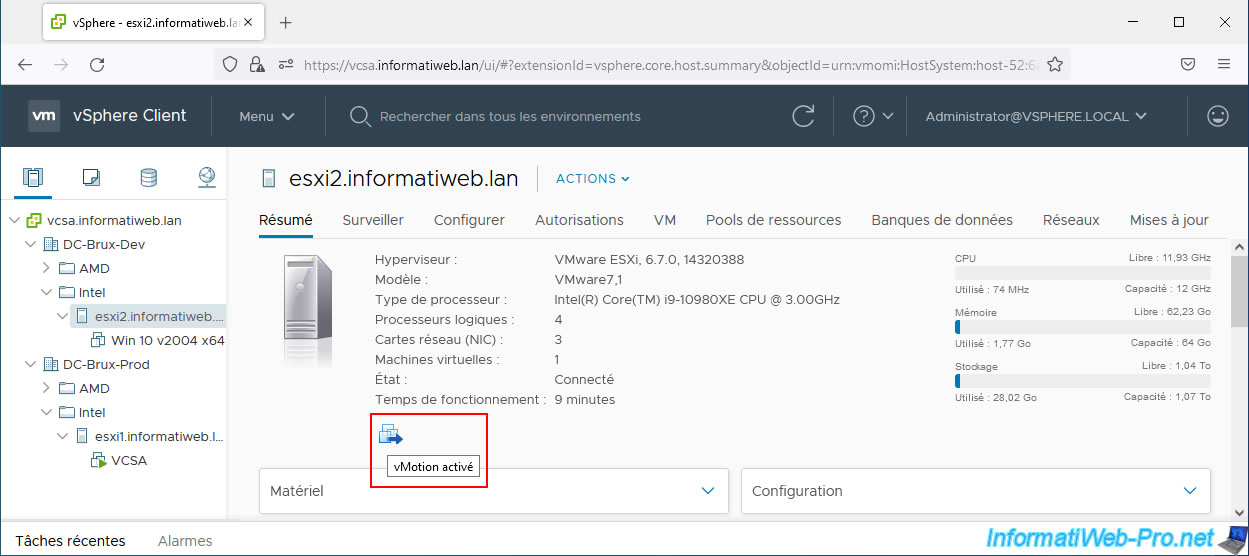

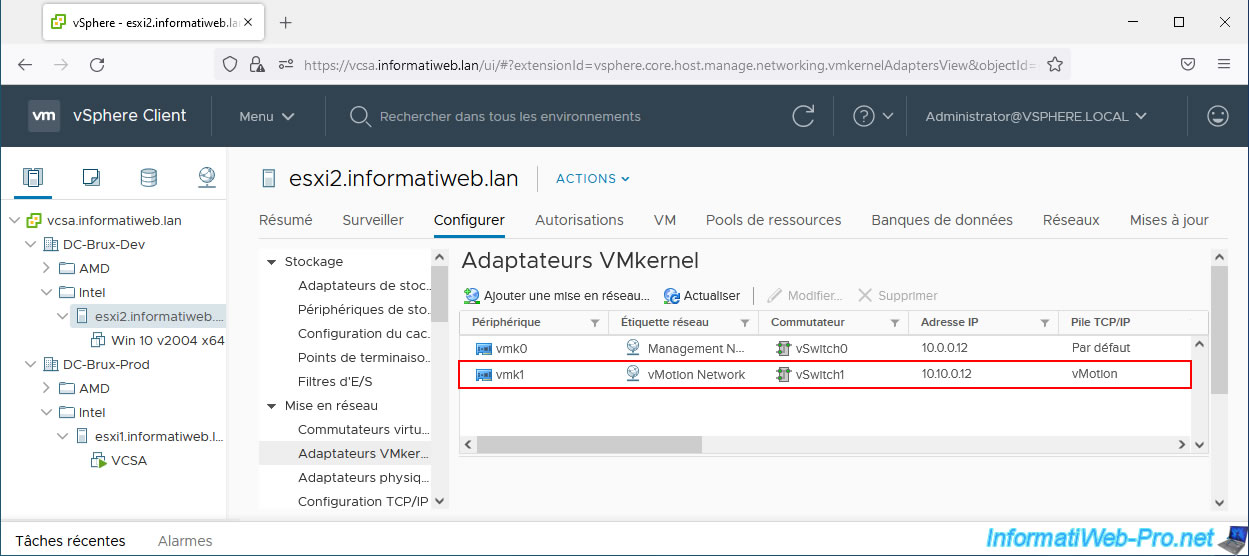

vMotion is also enabled on our 2nd host, which also has a VMkernel interface "vmk1" dedicated to vMotion traffic.

Its virtual switch is configured identically, except for the IP address of "vmk1", which is "10.10.0.12" on this host.
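Before testing connectivity, you can sanity-check the addressing plan. The Python sketch below is a hypothetical helper, not a VMware tool. It verifies that both vMotion interfaces share one dedicated subnet, as in this lab, and that this subnet does not overlap the management network. The /24 prefix length and the management subnet "192.168.1.0/24" are assumptions, since the tutorial gives neither.

```python
import ipaddress

# vMotion VMkernel addresses from this tutorial. The /24 prefix length is an
# assumption: the tutorial does not state the netmask used.
VMOTION_VMK = {
    "esxi1": ipaddress.ip_interface("10.10.0.11/24"),
    "esxi2": ipaddress.ip_interface("10.10.0.12/24"),
}
# Hypothetical management subnet, for illustration only.
MANAGEMENT_NET = ipaddress.ip_network("192.168.1.0/24")


def check_vmotion_subnet(vmks, management_net):
    """Return the problems found in a single-subnet vMotion addressing plan."""
    problems = []
    networks = {iface.network for iface in vmks.values()}
    if len(networks) != 1:
        problems.append("vMotion interfaces are not on the same subnet")
    for net in sorted(networks):
        if net.overlaps(management_net):
            problems.append(f"{net} overlaps the management network")
    addresses = [iface.ip for iface in vmks.values()]
    if len(set(addresses)) != len(addresses):
        problems.append("duplicate vMotion IP address")
    return problems


print(check_vmotion_subnet(VMOTION_VMK, MANAGEMENT_NET))  # []
```

The same-subnet check only reflects this lab: the vMotion TCP/IP stack has its own default gateway, so vMotion traffic can also be routed between subnets.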

2. Test network connectivity between 2 VMkernel vMotion interfaces

Once your VMware ESXi hosts are configured to allow virtual machine migration via vSphere vMotion, we recommend testing network connectivity between them.

To do this, you will need to enable the SSH protocol on the source VMware ESXi host and use the "vmkping" command.

Unlike the classic "ping" command, "vmkping" lets you test the network connection by specifying:

- which VMkernel interface to use on the source VMware ESXi host

- which TCP/IP stack to use

- the IP address of the remote host on the subnet used by this VMkernel interface
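These three values map to vmkping options: "-S" for the TCP/IP stack, "-I" for the VMkernel interface, then the remote IP address. As a sketch, the hypothetical Python helper below builds the command for each direction between our 2 hosts from the names and addresses used in this tutorial. The `VmotionInterface` class and `vmkping_command` function are illustrative names, not part of any VMware tool.

```python
import shlex
from dataclasses import dataclass


@dataclass
class VmotionInterface:
    host: str      # ESXi host name
    vmk: str       # VMkernel adapter name on the host
    netstack: str  # TCP/IP stack the adapter belongs to
    ip: str        # address on the vMotion subnet


def vmkping_command(source: VmotionInterface, target: VmotionInterface) -> str:
    """Build the vmkping command to run on `source` to reach `target`."""
    args = ["vmkping",
            "-S", source.netstack,  # TCP/IP stack to use
            "-I", source.vmk,       # outgoing VMkernel interface
            target.ip]              # remote vMotion address
    return shlex.join(args)


esxi1 = VmotionInterface("esxi1", "vmk1", "vmotion", "10.10.0.11")
esxi2 = VmotionInterface("esxi2", "vmk1", "vmotion", "10.10.0.12")

print(vmkping_command(esxi2, esxi1))  # vmkping -S vmotion -I vmk1 10.10.0.11
print(vmkping_command(esxi1, esxi2))  # vmkping -S vmotion -I vmk1 10.10.0.12
```

The first command is the one run from "esxi2" in this tutorial. The second command tests the opposite direction, from "esxi1".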

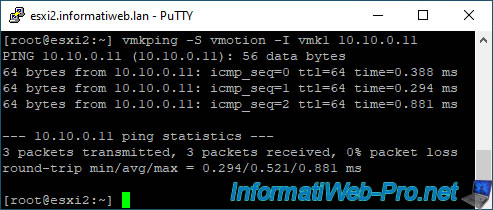

In our case, we will test the connection from our host "esxi2", which is part of the development data center (DC-Brux-Dev).

To do this, we use the "vmkping" command with the following parameters:

- -S vmotion: tells "vmkping" to use the "vmotion" TCP/IP stack

- -I vmk1: tells "vmkping" to send network packets through the VMkernel interface "vmk1"

- 10.10.0.11: the IP address of the remote VMware ESXi host ("esxi1") on the subnet dedicated to vMotion

```sh
vmkping -S vmotion -I vmk1 10.10.0.11
```

```text
PING 10.10.0.11 (10.10.0.11): 56 data bytes
64 bytes from 10.10.0.11: icmp_seq=0 ttl=64 time=0.388 ms
64 bytes from 10.10.0.11: icmp_seq=1 ttl=64 time=0.294 ms
64 bytes from 10.10.0.11: icmp_seq=2 ttl=64 time=0.881 ms

--- 10.10.0.11 ping statistics ---
3 packets transmitted, 3 packets received, 0% packet loss
round-trip min/avg/max = 0.294/0.521/0.881 ms
```

As expected, all 3 packets sent (3 packets transmitted) reached the remote host, and 3 replies were received (0% packet loss).
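When you test many host pairs, checking this output by eye becomes tedious. The following Python sketch parses the summary printed by vmkping and reports the packet counts and the average round-trip time. It assumes the output format captured in this tutorial. Other ESXi builds may format the output differently, so treat the regular expressions as an assumption.

```python
import re

# Patterns based on the vmkping output shown in this tutorial (assumed format).
SUMMARY = re.compile(
    r"(\d+) packets transmitted, (\d+) packets received, ([\d.]+)% packet loss")
RTT = re.compile(r"time=([\d.]+) ms")


def check_vmkping(output: str) -> dict:
    """Extract packet counts and the average RTT from vmkping output."""
    m = SUMMARY.search(output)
    if m is None:
        raise ValueError("no ping statistics found in output")
    sent, received, loss = int(m.group(1)), int(m.group(2)), float(m.group(3))
    times = [float(t) for t in RTT.findall(output)]
    return {
        "sent": sent,
        "received": received,
        "loss_percent": loss,
        "ok": sent > 0 and received == sent,  # every packet got a reply
        "avg_ms": round(sum(times) / len(times), 3) if times else None,
    }


# Output captured in this tutorial (from esxi2 to esxi1).
OUTPUT = """PING 10.10.0.11 (10.10.0.11): 56 data bytes
64 bytes from 10.10.0.11: icmp_seq=0 ttl=64 time=0.388 ms
64 bytes from 10.10.0.11: icmp_seq=1 ttl=64 time=0.294 ms
64 bytes from 10.10.0.11: icmp_seq=2 ttl=64 time=0.881 ms

--- 10.10.0.11 ping statistics ---
3 packets transmitted, 3 packets received, 0% packet loss
round-trip min/avg/max = 0.294/0.521/0.881 ms
"""

print(check_vmkping(OUTPUT))
```

The computed average (0.521 ms) matches the "avg" value printed by vmkping itself.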

If an error occurs, refer to our vMotion tutorial, which explains common configuration mistakes and how to troubleshoot them.

3. Virtual networks used

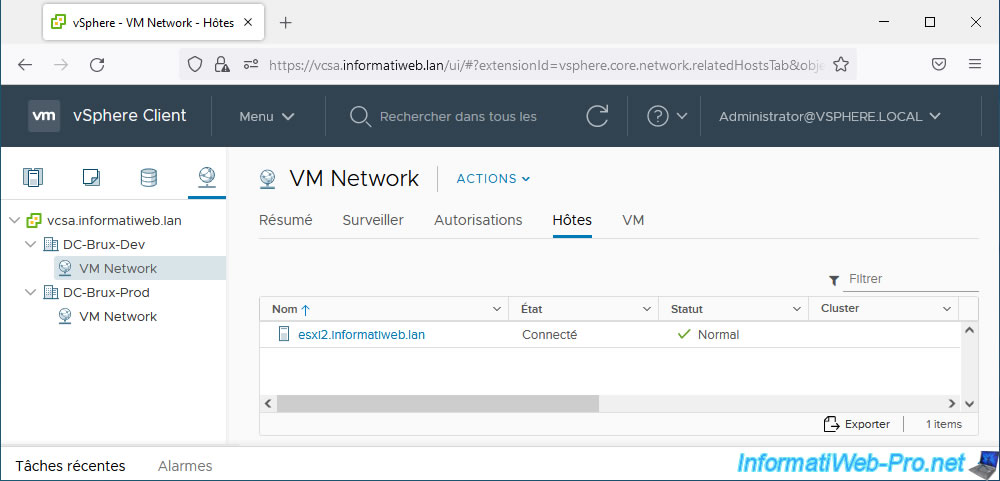

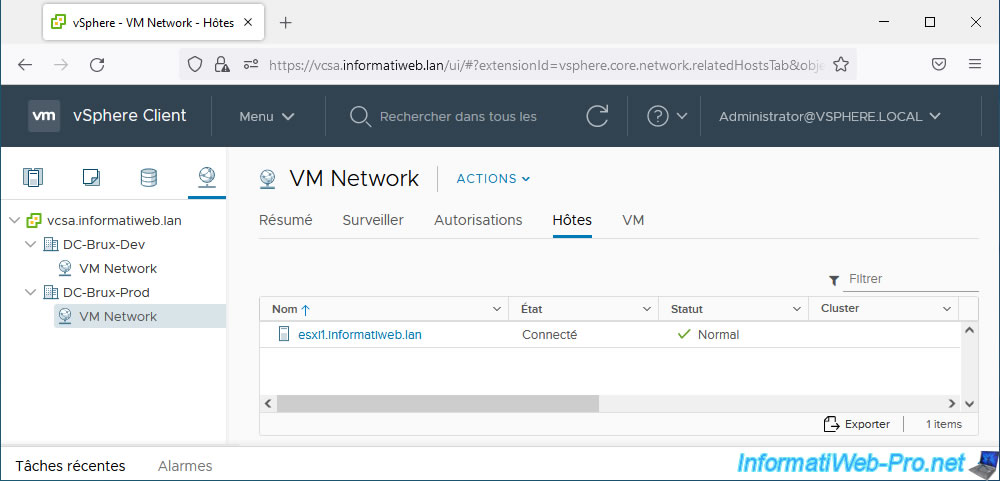

In our case, we use the same virtual network in our 2 data centers (DC-Brux-Dev and DC-Brux-Prod).

However, from a technical point of view, a virtual network is only unique within a data center. VMware vCenter Server therefore considers these to be 2 different virtual networks, which is why this network appears twice in the list on the left.
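The following Python sketch models this behavior under a simplifying assumption: a virtual network is identified by the pair (data center, network name). This is not how vCenter stores networks internally. "VM Network" is a placeholder, since the tutorial does not name the shared network.

```python
from collections import Counter

# Simplified model (an assumption): a network is keyed by (data center, name),
# because network names are only unique within a data center.
# "VM Network" is a placeholder name, not taken from the tutorial.
networks = [
    ("DC-Brux-Dev", "VM Network"),
    ("DC-Brux-Prod", "VM Network"),
]


def duplicated_names(nets):
    """Return the network names that exist in more than one data center."""
    counts = Counter(name for _dc, name in set(nets))
    return sorted(name for name, n in counts.items() if n > 1)


print(len(set(networks)))          # 2 distinct networks from vCenter's point of view
print(duplicated_names(networks))  # ['VM Network']
```

The name is shared, but the keys differ because the data centers differ. This is why the same name is listed once per data center.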
