
- 07 April 2023 at 08:33 UTC


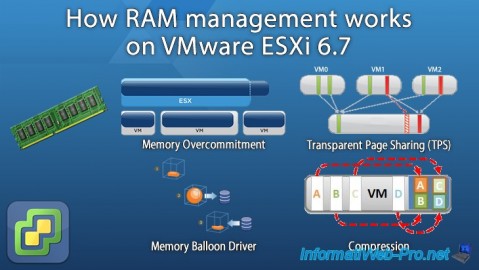

VMware ESXi is a hypervisor that efficiently manages the use of your system resources (RAM, CPU, ...).

VMware ESXi has several mechanisms to manage memory efficiently and to reclaim memory when needed.

The rest of this article explains the concepts and mechanisms that allow VMware ESXi to make the best use of your physical RAM and to reclaim RAM when necessary:

- Memory Overcommitment: the ability to allocate more RAM to your VMs than the amount of physical RAM available on your VMware ESXi host.

- Transparent Page Sharing (TPS): allows multiple virtual machines to share identical memory pages to save RAM.

- Ballooning driver: reclaims RAM by recovering memory pages that your virtual machines aren't using.

- Compression: compresses virtual memory pages to 2 KB or less to increase the memory available on the host.

- System Swap: frees up RAM by moving memory pages from RAM to the host's hard drive.

Note that these mechanisms are used one after the other: first to make the best use of your VMware ESXi host's RAM, then to reclaim more and more RAM when necessary.
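To make this escalation order concrete, here is a minimal Python sketch. It assumes that each mechanism can free a fixed, made-up amount of RAM; the `reclaim` function and all the figures are hypothetical and don't reflect how ESXi actually measures or triggers these mechanisms.

```python
from __future__ import annotations

# Order in which the mechanisms described in this article are tried
RECLAIM_ORDER = ["TPS", "ballooning", "compression", "swap"]


def reclaim(needed_mb: int, capacity_mb: dict[str, int]) -> list[tuple[str, int]]:
    """Use each mechanism in order until `needed_mb` has been freed.

    `capacity_mb` holds hypothetical amounts (in MB) each mechanism could free.
    Returns the (mechanism, MB freed) steps actually used.
    """
    steps = []
    remaining = needed_mb
    for mechanism in RECLAIM_ORDER:
        if remaining <= 0:
            break  # enough RAM recovered: the costlier mechanisms aren't needed
        freed = min(remaining, capacity_mb.get(mechanism, 0))
        if freed > 0:
            steps.append((mechanism, freed))
            remaining -= freed
    return steps


print(reclaim(3000, {"TPS": 1000, "ballooning": 1500, "compression": 400, "swap": 10_000}))
# [('TPS', 1000), ('ballooning', 1500), ('compression', 400), ('swap', 100)]
```

Swap is only reached here because the earlier mechanisms couldn't free the full 3000 MB, which mirrors the "last resort" role described later in this article.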

1. Memory Overcommitment

VMware ESXi is a hypervisor that allows memory overcommitment.

This means you can allocate more RAM to your virtual machines than the amount of RAM available on your VMware ESXi host.

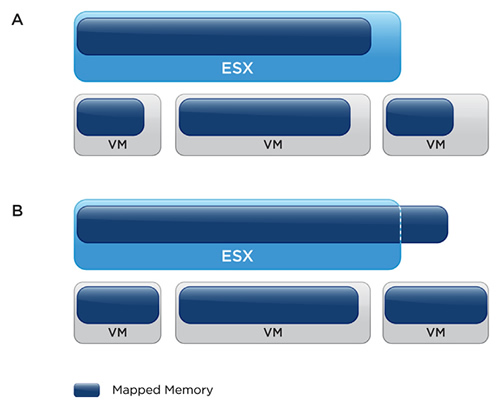

In the first diagram (A), memory is undercommitted.

In this example:

- the ESXi host has 16 GB of RAM

- 2 VMs have 4 GB of RAM allocated (configured)

- 1 VM has 8 GB of RAM allocated (configured)

If you start the 2 VMs with 4 GB of RAM allocated each, the allocated memory will be 8 GB, which is less than the amount of RAM (16 GB) available on this host.

Memory is therefore undercommitted, which poses no problem.

In the second diagram (B), memory can become overcommitted.

In this example:

- the ESXi host has 16 GB of RAM

- the 1st VM has 5 GB of RAM allocated (configured)

- the 2nd VM has 9 GB of RAM allocated (configured)

- the 3rd VM has 6 GB of RAM allocated (configured)

If you start all 3 virtual machines so that they are powered on simultaneously, you will end up with a RAM allocation of 20 GB, while your VMware ESXi host only has 16 GB.

However, if your virtual machines aren't currently using much RAM, memory may not actually be overcommitted yet, which is not a problem.

In fact, memory is overcommitted when the RAM consumed (actually used) by your virtual machines exceeds the amount of RAM physically available on your VMware ESXi host.
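The arithmetic of diagram (B) can be sketched in Python. Only the configured values (5, 9 and 6 GB on a 16 GB host) come from the example above; the consumption figures are hypothetical.

```python
from __future__ import annotations

HOST_RAM_GB = 16
# Configured (allocated) RAM of the 3 VMs from diagram (B)
configured_gb = {"VM1": 5, "VM2": 9, "VM3": 6}


def total(values: dict[str, float]) -> float:
    return sum(values.values())


def is_overcommitted(consumed_gb: dict[str, float], host_ram_gb: float) -> bool:
    """Overcommitted in the sense used here: RAM actually consumed exceeds host RAM."""
    return total(consumed_gb) > host_ram_gb


print(total(configured_gb))                # 20 GB configured on a 16 GB host
print(total(configured_gb) / HOST_RAM_GB)  # configured-to-physical ratio: 1.25

# Hypothetical consumption: the VMs only use part of their configured RAM
light_load = {"VM1": 2, "VM2": 4, "VM3": 3}
heavy_load = {"VM1": 5, "VM2": 8, "VM3": 5}
print(is_overcommitted(light_load, HOST_RAM_GB))  # 9 GB used -> False
print(is_overcommitted(heavy_load, HOST_RAM_GB))  # 18 GB used -> True
```

The configured total is the same in both cases; only actual consumption decides whether ESXi has to start reclaiming memory.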

Memory overcommitment is a very useful feature of VMware ESXi, because the RAM allocated to virtual machines is very often underutilized.

Indeed:

- a virtual machine consumes a lot more resources when it starts, but a lot less afterwards.

- in general, only some of your virtual machines actually consume the amount of RAM you give them; the others don't. This is especially true because a large memory allocation is often simply a prerequisite set by the vendor of a business application, while that amount of RAM isn't actually used in practice (or at least not most of the time).

- a virtual machine may use more RAM at some point, but this is often temporary.

As long as these peaks don't happen on all your virtual machines at the same time, it's not a problem.

In short, if memory becomes overcommitted, VMware ESXi will reclaim unused RAM from your virtual machines and give it to the virtual machines that really need it.

This allows your virtual machines to keep running but, as you will see later in this article, it can also degrade their performance.

Remember that you can reserve RAM, manage RAM allocation priority and limit the RAM usage of your virtual machines with the "Reservation", "Shares" and "Limit" settings to prevent your virtual machines from running out of RAM when your VMware ESXi host's RAM is overcommitted.

To find out how, go to this page: VMware ESXi 6.7 - Configure your virtual machines settings - Memory (RAM).

However, be careful not to reserve too much RAM, as this can have the opposite effect.

Indeed, reserved memory (even when it isn't used by the VM concerned) can't be reclaimed by VMware ESXi and given to another virtual machine that may need more at some point.

Source: Memory Overcommitment - VMware Docs.

2. Transparent Page Sharing (TPS)

Thanks to a proprietary technique called Transparent Page Sharing (TPS), the VMkernel of VMware ESXi can use your physical RAM more efficiently.

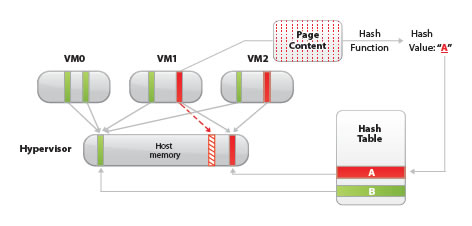

Indeed, when TPS is enabled (which, between virtual machines, isn't the case by default), the VMware ESXi VMkernel detects memory pages that are identical (in green in the diagram) and lets your virtual machine(s) share them.

This saves a lot of memory if your virtual machines use the same operating system, the same applications and/or the same data (documents, images, ...).

Note that TPS can share memory pages within a single virtual machine as well as between several virtual machines running similar workloads.

Of course, shared memory pages are read-only: if one of the virtual machines concerned needs to modify a shared page (in red in the diagram), the VMware ESXi VMkernel creates a private copy of this page for that VM (copy-on-write) and the modification is applied to this copy.

This corresponds to the page hatched in red in the diagram. The other virtual machines continue to use the original read-only shared page.
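The sharing and copy-on-write behavior described above can be illustrated with a toy Python model. It's a sketch under assumptions, not the VMkernel's implementation: pages are matched with a SHA-256 hash followed by a full content comparison, and the `SharedMemory` class is hypothetical.

```python
from __future__ import annotations

import hashlib


class SharedMemory:
    """Toy model of content-based page sharing with copy-on-write."""

    def __init__(self) -> None:
        self.frames: dict[int, bytes] = {}             # physical frame -> content
        self.refcount: dict[int, int] = {}             # physical frame -> number of users
        self.by_hash: dict[str, int] = {}              # content hash -> frame
        self.mapping: dict[tuple[str, int], int] = {}  # (vm, guest page) -> frame
        self._next = 0

    def _new_frame(self, content: bytes) -> int:
        frame = self._next
        self._next += 1
        self.frames[frame] = content
        self.refcount[frame] = 0
        return frame

    def map_page(self, vm: str, page: int, content: bytes) -> None:
        key = hashlib.sha256(content).hexdigest()
        frame = self.by_hash.get(key)
        # Full comparison guards against hash collisions and modified frames
        if frame is None or self.frames[frame] != content:
            frame = self._new_frame(content)
            self.by_hash[key] = frame
        self.mapping[(vm, page)] = frame
        self.refcount[frame] += 1

    def write_page(self, vm: str, page: int, content: bytes) -> None:
        frame = self.mapping[(vm, page)]
        if self.refcount[frame] > 1:
            # Copy-on-write: this VM gets a private copy, the others keep the shared page
            self.refcount[frame] -= 1
            frame = self._new_frame(self.frames[frame])
            self.refcount[frame] = 1
            self.mapping[(vm, page)] = frame
        self.frames[frame] = content


mem = SharedMemory()
zero = bytes(4096)
mem.map_page("VM1", 0, zero)
mem.map_page("VM2", 0, zero)
print(len(mem.frames))  # 1: both VMs share one physical page
mem.write_page("VM2", 0, b"\x01" * 4096)
print(len(mem.frames))  # 2: VM2 now has a private copy
print(mem.frames[mem.mapping[("VM1", 0)]] == zero)  # True: VM1 still sees the original
```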

Important: for security reasons, Transparent Page Sharing between virtual machines (inter-VM TPS) has been disabled by default since VMware ESXi 6.0 (and in later update releases of ESXi 5.x). TPS within a single virtual machine (intra-VM TPS) remains enabled by default.

Note that Transparent Page Sharing (TPS) is only supported for small memory pages (4 KB).

This is because:

- the probability of finding 2 identical large memory pages (2 MB) is very low.

- comparing two 2 MB pages bit by bit would use far more system resources than comparing two 4 KB pages.

When necessary, VMware ESXi can break 2 MB pages down into 4 KB pages (512 per large page) so that they can be shared via TPS.
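As a rough illustration of why large pages are split first, this Python sketch breaks a 2 MB page into 4 KB pages and counts how many duplicate another page. The page contents are made up and the helper names are hypothetical.

```python
from __future__ import annotations

LARGE_PAGE = 2 * 1024 * 1024  # 2 MB
SMALL_PAGE = 4 * 1024         # 4 KB


def split_large_page(large: bytes) -> list[bytes]:
    """Break one 2 MB page into 4 KB pages (2 MB / 4 KB = 512 pages)."""
    if len(large) != LARGE_PAGE:
        raise ValueError("expected a 2 MB page")
    return [large[i:i + SMALL_PAGE] for i in range(0, LARGE_PAGE, SMALL_PAGE)]


def duplicate_count(small_pages: list[bytes]) -> int:
    """Number of 4 KB pages identical to another one (candidates for sharing)."""
    return len(small_pages) - len(set(small_pages))


# Hypothetical content: first half zero-filled, second half 256 distinct pages
large = bytes(LARGE_PAGE // 2) + b"".join(
    (n + 1).to_bytes(2, "big") * (SMALL_PAGE // 2) for n in range(256)
)
pages = split_large_page(large)
print(len(pages))              # 512
print(duplicate_count(pages))  # 255: all zero pages but one could be shared
```

As a single 2 MB unit, this page would almost never match another large page, while half of its 4 KB pieces are identical to each other.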

Finally, keep in mind that Transparent Page Sharing (TPS), if enabled, only comes into play when your VMware ESXi host's free RAM becomes too low.

This avoids consuming unnecessary system resources when your VMware ESXi host's RAM is currently underutilized.

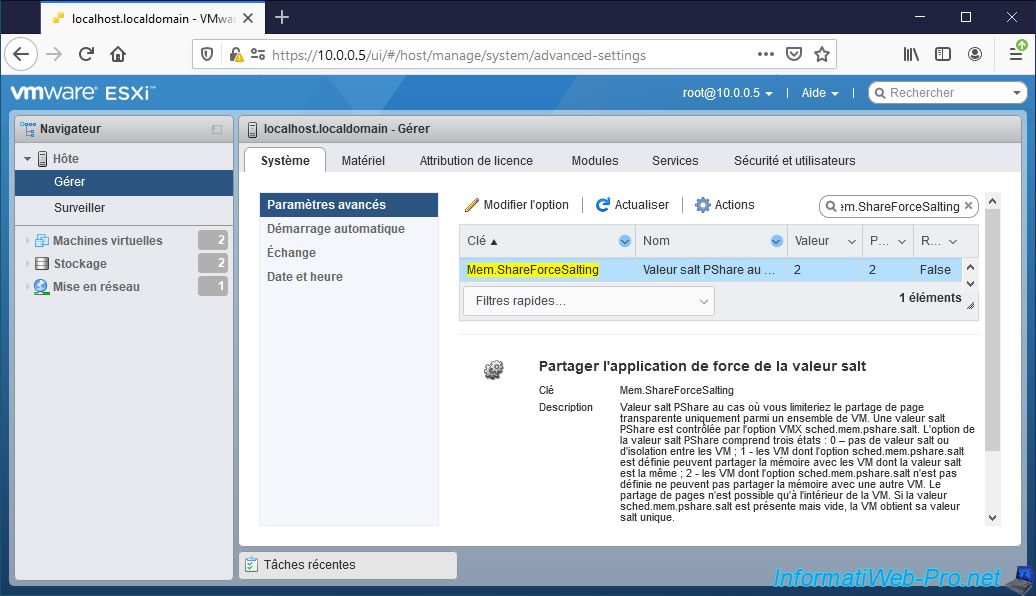

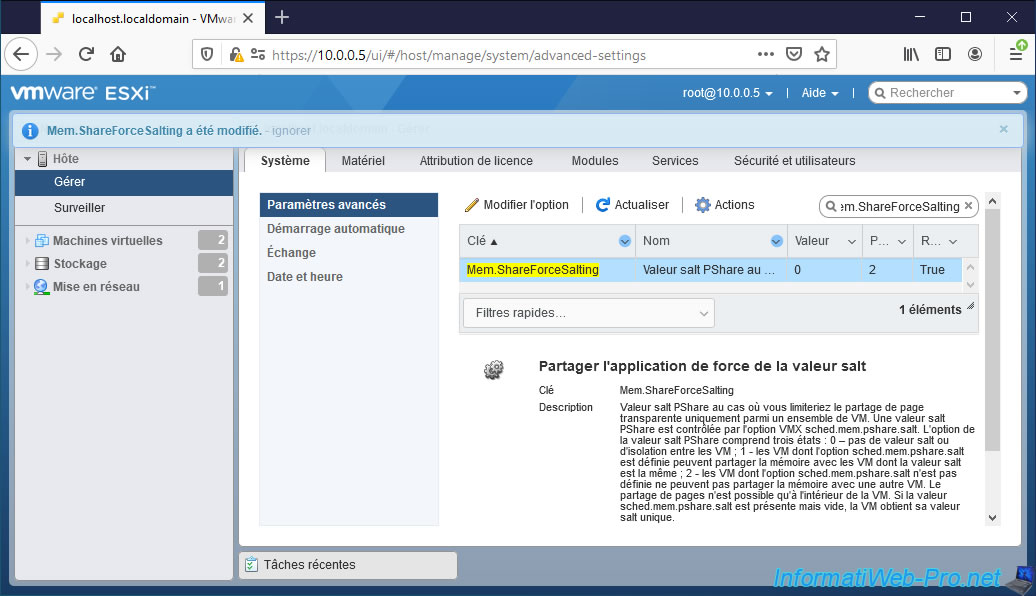

If you want to re-enable Transparent Page Sharing (TPS) between virtual machines, open the VMware ESXi web interface and go to: Host -> Manage -> System -> Advanced Settings.

Then, look for the "Mem.ShareForceSalting" advanced setting.

As you can see in its description, pages can only be shared between VMs when the value of this setting is:

- 0: to use the same behavior as in the past (sharing between all VMs).

- 1: if you set the same salt for the virtual machines that are allowed to share identical pages.

Otherwise, page sharing isn't allowed between VMs, which is the default (value 2).
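The three values described above can be summarized as a small decision function. This Python sketch only models the simplified description shown in the ESXi interface: the function name is hypothetical, and edge cases (for example, how a VM without any salt is treated with value 1) are deliberately not modeled.

```python
from __future__ import annotations

from typing import Optional


def can_share_between_vms(share_force_salting: int,
                          salt_a: Optional[str],
                          salt_b: Optional[str]) -> bool:
    """Simplified model of Mem.ShareForceSalting for two VMs with the given salts.

    0 -> pages may be shared between all VMs (previous behavior)
    1 -> pages may be shared only between VMs configured with the same salt
    2 -> default: no page sharing between VMs
    """
    if share_force_salting == 0:
        return True
    if share_force_salting == 1:
        return salt_a is not None and salt_a == salt_b
    if share_force_salting == 2:
        return False
    raise ValueError("Mem.ShareForceSalting must be 0, 1 or 2")


print(can_share_between_vms(1, "web-farm", "web-farm"))  # True
print(can_share_between_vms(2, "web-farm", "web-farm"))  # False
```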

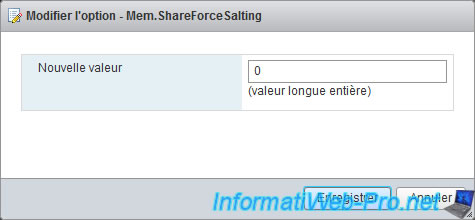

Select this advanced setting and click "Edit option".

Specify the value "0" to allow Transparent Page Sharing (TPS) between all virtual machines on your VMware ESXi host and click Save.

The "Successfully changed Mem.ShareForceSalting" message appears.

Important: for this change to take effect on virtual machines that are currently powered on, you will need to:

- power them off, then power them on again.

- or, if you want to avoid shutting them down, migrate them to another VMware ESXi host and then back to the source host.

Sources:

- Memory Sharing - VMware Docs

- Security considerations and disallowing inter-Virtual Machine Transparent Page Sharing (2080735)

- Transparent Page Sharing (TPS) in hardware MMU systems (1021095)

- Advanced Memory Attributes - VMware Docs

3. Memory Balloon Driver

Thanks to the memory balloon driver (vmmemctl), your VMware ESXi hypervisor can reclaim RAM by asking your virtual machines to free the memory pages that they aren't using (or no longer use) at the moment.

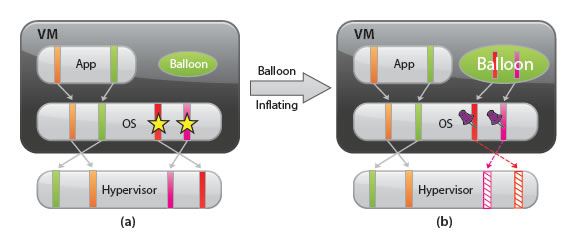

In diagram "a":

- the pages used by the virtual machine are those in green and orange. These pages are currently used by an application running in this virtual machine.

- the pages in red and pink marked with stars had been used by this virtual machine in the past, but are now "free" in this virtual machine's memory.

Diagram "b" shows what happens when VMware ESXi asks this virtual machine which memory pages it can reclaim:

- the "balloon" driver (vmmemctl) installed in the virtual machine "inflates" by claiming pages that the guest operating system of this virtual machine isn't using.

- once these pages are claimed, the "balloon" driver (vmmemctl) tells VMware ESXi which memory page numbers it can reclaim.

- VMware ESXi can then easily reclaim the physical RAM backing these pages, since neither the "balloon" driver nor the guest operating system uses their contents.

- if the guest operating system later tries to access these memory pages again, VMware ESXi allocates new pages of physical RAM and maps the VM's pages to them.

Then, VMware ESXi redistributes the reclaimed RAM to the virtual machines that currently need it.
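The steps of diagram "b" can be sketched as a toy Python model. The page numbers and the `inflate_balloon` helper are hypothetical; a real balloon driver obtains and pins pages through the guest OS's own memory allocator, not through a Python set.

```python
from __future__ import annotations


def inflate_balloon(guest_free_pages: set[int], target_pages: int) -> list[int]:
    """Toy balloon inflation inside one guest.

    Takes up to `target_pages` pages that the guest OS considers free, removes
    them from the guest's free pool (as if pinned by the driver) and returns
    their numbers, i.e. what the driver reports to the hypervisor.
    """
    claimed = sorted(guest_free_pages)[:target_pages]
    for page in claimed:
        guest_free_pages.discard(page)  # the guest now sees these pages as used by the driver
    return claimed


host_free_frames = 0
guest_free = {7, 8, 12, 13}  # pages freed by the guest earlier (the "starred" pages)
reclaimed = inflate_balloon(guest_free, target_pages=3)
host_free_frames += len(reclaimed)  # ESXi can hand these frames to other VMs
print(reclaimed, host_free_frames, guest_free)  # [7, 8, 12] 3 {13}
```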

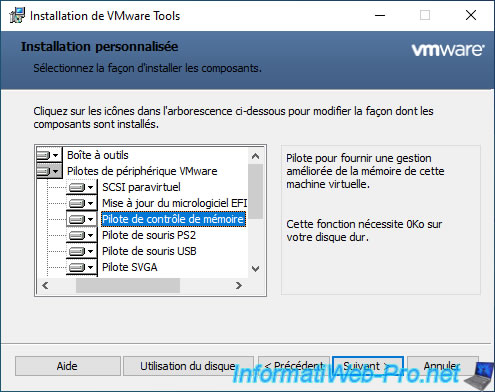

Important: for VMware ESXi to be able to reclaim memory that your virtual machines aren't using, VMware Tools must be installed on them.

Indeed, when you install VMware Tools in a virtual machine, a memory control driver is installed with them.

It's this driver that is used for the memory inflation (ballooning) described above.

Without this driver, VMware ESXi can't reclaim unused memory from the guest OS, since it has no visibility into how the guest uses the contents of its memory.

Note that, if desired, you can change the maximum amount of RAM (in MB) that this driver can reclaim from a given virtual machine by configuring the "sched.mem.maxmemctl" setting on that virtual machine.

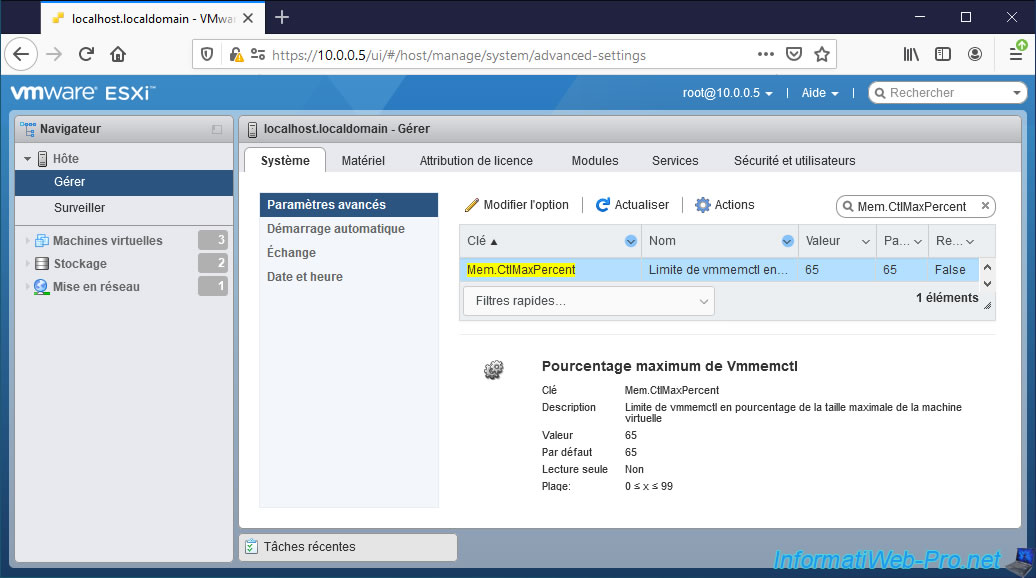

You can also adjust, for the whole host, the maximum percentage of each virtual machine's memory that this driver can reclaim by modifying the "Mem.CtlMaxPercent" advanced setting.

To do this, go to "Host -> Manage -> System -> Advanced Settings" and look for the "Mem.CtlMaxPercent" setting.

Then, select it and click "Edit option".

Specify the desired percentage (default: 65) and click Save.
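To see how these two limits could interact, here is a minimal Python sketch. It assumes (this isn't confirmed by this article) that the per-VM "sched.mem.maxmemctl" value and the host-wide "Mem.CtlMaxPercent" percentage both apply, so the smaller of the two wins; the function name is hypothetical.

```python
from __future__ import annotations

from typing import Optional


def max_balloon_mb(vm_memory_mb: int, ctl_max_percent: int = 65,
                   maxmemctl_mb: Optional[int] = None) -> int:
    """Upper bound on the RAM the balloon driver may reclaim from one VM.

    ASSUMPTION: both the host-wide percentage (Mem.CtlMaxPercent) and the
    per-VM absolute cap in MB (sched.mem.maxmemctl) apply; the smaller wins.
    """
    limit = vm_memory_mb * ctl_max_percent // 100
    if maxmemctl_mb is not None:
        limit = min(limit, maxmemctl_mb)
    return limit


print(max_balloon_mb(8192))                     # 5324 MB (65 % of 8 GB, rounded down)
print(max_balloon_mb(8192, maxmemctl_mb=2048))  # 2048 MB: the per-VM cap is smaller
```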

Finally, keep in mind that ballooning is only used when the available RAM on your VMware ESXi host is still too low despite transparent page sharing (TPS).

Source: Memory Balloon Driver - VMware Docs.

4. Compression

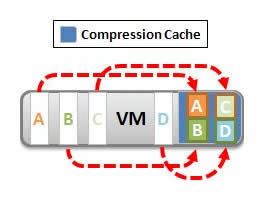

If the previous mechanisms (TPS and ballooning) don't recover enough RAM on your VMware ESXi host, your hypervisor will attempt to compress the virtual memory pages.

Specifically, VMware ESXi will attempt to compress pages to 2 KB or less and store them in the affected virtual machine's compression cache.
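The 2 KB rule can be illustrated with Python's `zlib` module as a stand-in compressor; ESXi's actual compression algorithm isn't covered here. In this simplified model, pages that don't shrink to 2 KB or less are left for swapping instead.

```python
from __future__ import annotations

import os
import zlib
from typing import Optional

PAGE_SIZE = 4096         # 4 KB guest page
COMPRESSED_LIMIT = 2048  # a page is kept compressed only if it fits in 2 KB


def try_compress(page: bytes) -> Optional[bytes]:
    """Return the compressed page if it fits in 2 KB, otherwise None."""
    if len(page) != PAGE_SIZE:
        raise ValueError("expected a 4 KB page")
    compressed = zlib.compress(page)
    return compressed if len(compressed) <= COMPRESSED_LIMIT else None


compression_cache: dict[int, bytes] = {}  # page number -> compressed content
to_swap: list[int] = []                   # pages that didn't compress well enough

# Hypothetical pages: zero-filled, repetitive text, and random (incompressible) data
pages = {
    0: bytes(PAGE_SIZE),
    1: b"log line\n" * 455 + b"x",
    2: os.urandom(PAGE_SIZE),
}
for number, content in pages.items():
    compressed = try_compress(content)
    if compressed is not None:
        compression_cache[number] = compressed
    else:
        to_swap.append(number)

print(sorted(compression_cache), to_swap)  # [0, 1] [2]
```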

Note that memory compression is enabled by default on VMware ESXi and that accessing compressed memory is faster than accessing swapped memory, since swapped memory is stored on the hard disk.

Source: Memory Compression - VMware Docs.

5. System Swap

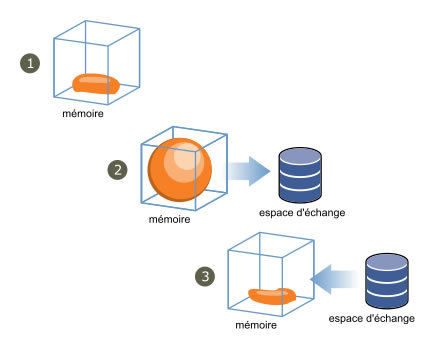

If the various memory management mechanisms explained previously don't recover enough RAM on your VMware ESXi hypervisor, then it will use system swapping.

On VMware ESXi, System Swap involves moving memory pages from RAM to a swap file stored on the host hard drive.

Warning: this means that performance will be greatly degraded, since disk access is much slower than RAM access. This is all the more true because VMware ESXi picks the virtual machine's memory pages to move at random.

This can significantly slow down the virtual machine, depending on which pages have been moved.

That said, according to VMware, the hypervisor has no technical way of knowing what a virtual machine's pages contain, hence the random selection of pages to free up RAM quickly.
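The random selection can be sketched with Python's `random.sample`. The page numbers and the `pick_pages_to_swap` helper are hypothetical; a fixed seed is used only so the example is repeatable.

```python
import random


def pick_pages_to_swap(resident_pages: list, count: int, rng: random.Random) -> list:
    """Pick `count` resident guest pages at random, since the hypervisor
    doesn't know which pages the guest OS considers important."""
    return rng.sample(resident_pages, k=min(count, len(resident_pages)))


rng = random.Random(42)  # fixed seed only to make the example repeatable
resident = list(range(100))  # hypothetical guest page numbers held in RAM
swapped = pick_pages_to_swap(resident, 10, rng)
print(sorted(swapped))
```

Because the choice ignores how the guest uses its pages, a "hot" page is as likely to be swapped out as an idle one, which explains the unpredictable slowdown described above.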

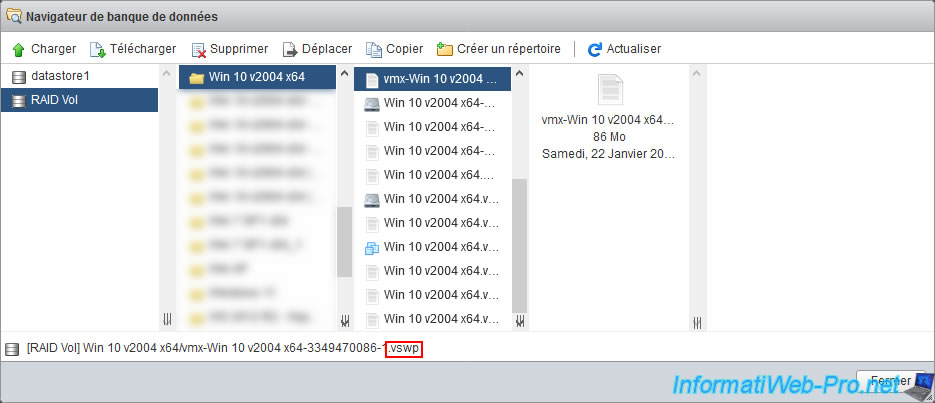

More precisely, the swap file is the ".vswp" file, created when each virtual machine is powered on and deleted when it's powered off.

By default, this file is stored on the host's hard disk, in the folder of the virtual machine concerned.

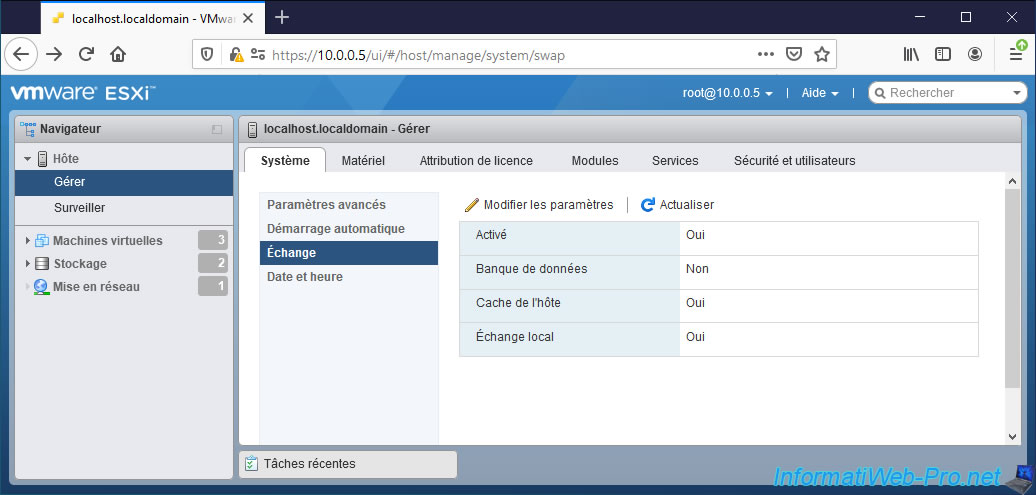

Note that you can change the default location of this swap file (.vswp) globally by going to : Host -> Manage -> System -> Swap.

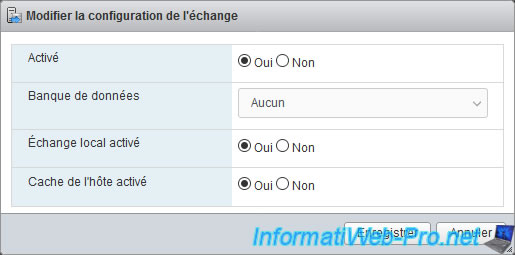

Then, click on : Edit settings.

In the "Edit swap configuration" window that appears, you will be able to :

- Enabled : enable or not the system swap

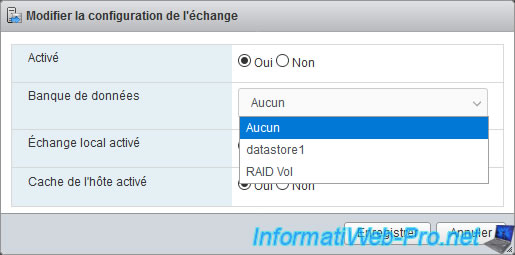

- Datastore: choose the datastore on which the swap file should be created.

- Local swap enabled: enable or disable the use of the local cache.

- Host cache enabled: enable or disable the use of the host cache.

For the "Datastore" option, you can, for example, select a datastore on faster storage (such as an SSD) instead of a classic hard disk so that access to swap files (.vswp) is faster.

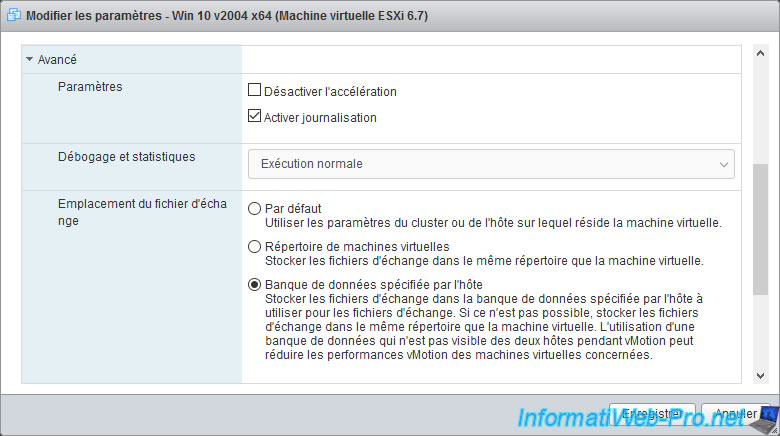

Note that you can also change this behavior for each virtual machine individually by selecting it, then clicking "Edit".

Then, go to the "VM Options" tab.

In the "Advanced" section, you will find a "Swap file location" setting with the following choices:

- Default: use the setting defined on the cluster (if applicable) or on the VMware ESXi host where this virtual machine is located.

- Virtual machine directory: the swap file (.vswp) will be created in the virtual machine folder, which is the default behavior on VMware ESXi.

- Datastore specified by host: if a datastore has been selected in the "Swap" settings of your VMware ESXi host (mentioned earlier), the swap file (.vswp) for this virtual machine will be created on that datastore. Otherwise, it will be stored in the virtual machine folder.
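These three options can be summarized as a small resolver. This Python sketch is hypothetical: the policy strings ("default", "vm_directory", "host_datastore") and the datastore names are made up for illustration and are not ESXi setting values.

```python
from __future__ import annotations

from typing import Optional


def resolve_swap_location(vm_policy: str, vm_folder: str,
                          host_swap_datastore: Optional[str],
                          cluster_policy: Optional[str] = None) -> str:
    """Hypothetical resolver for the "Swap file location" options."""
    if vm_policy == "default":
        # Inherit the cluster setting if there is one, otherwise the host's behavior
        vm_policy = cluster_policy or "host_datastore"
    if vm_policy == "vm_directory":
        return vm_folder
    if vm_policy == "host_datastore":
        # Falls back to the VM folder when no datastore is selected on the host
        return host_swap_datastore or vm_folder
    raise ValueError(f"unknown policy: {vm_policy}")


print(resolve_swap_location("default", "[datastore1] MyVM", "[ssd1]"))  # [ssd1]
print(resolve_swap_location("host_datastore", "[datastore1] MyVM", None))  # [datastore1] MyVM
```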

Warning: if you have several VMware ESXi hosts and want to migrate your virtual machines from one host to another with vSphere vMotion, it's strongly recommended to store the swap file (.vswp) on a datastore visible to both the source and destination hosts.

Otherwise, vSphere vMotion performance will be degraded, because pages that have been swapped out to the swap file will need to be transferred over the network.

As a reminder, this RAM reclamation mechanism is only used as a last resort, since it greatly degrades the performance of the virtual machines concerned.

The goal is to prevent a virtual machine or your host from crashing due to a lack of RAM.

Sources:

- About System Swap - VMware Docs

- Configure Virtual Machine Swapfile Properties for the Host - VMware Docs

- Swap File Location - VMware Docs

To learn even more about memory management on VMware ESXi, see VMware's official "Understanding Memory Resource Management in VMware® ESX™ Server" PDF.
